“Further progress lies in the direction of making our equations invariant under wider and still wider transformations.”

These prophetic lines were written in 1930 by P. A. M. Dirac in his famous book “The Principles of Quantum Mechanics”. In the following centuries, tremendous progress was made exactly as he predicted.

Weak interactions were described perfectly using $SU(2)$ symmetry, strong interactions using $SU(3)$ symmetry and it is well known that electrodynamics can be derived from $U(1)$ symmetry. Other aspects of elementary particles, like their spin, can be understood using the symmetry of special relativity.

A symmetry is a transformation that leaves our equations invariant, i.e. that does not change the equations. A set of symmetry transformations is called a group and, for example, the set of transformations that leaves the equations of special relativity invariant is called the Poincare group.

By making our equations invariant under the quite large set of transformations:

$$ \text{Poincare Group} \times U(1) \times SU(2) \times SU(3) , $$

we are able to describe all known interactions of elementary particles, except for gravity. This symmetry is the core of the standard model of modern physics, which is approximately 40 years old. Since then it has been confirmed many times, for example, through the discovery of the Higgs boson. Just as Dirac predicted, we gained incredible insights into the inner workings of nature, by making the symmetry of our equations larger and larger.

Unfortunately, since the completion of the standard model $\sim 40$ years ago, there was no further progress in this direction. No further symmetry of nature was revealed by experiments. (At least that’s the standard view, but I don’t think it’s true. More on that later). In 2017 our equations are still simply invariant under $ \text{Poincare Group} \times U(1) \times SU(2) \times SU(3) , $ but no larger symmetry.

I’m a big believer in Dirac’s mantra. Despite the lack of new experimental insights, I do think there are many great ideas for how symmetries could guide us towards the correct theory beyond the standard model.

Before we can discuss some of these ideas, there is one additional thing that should be noted. Although the four groups $ \text{Poincare Group} \times U(1) \times SU(2) \times SU(3) $ are written equally next to each other, they aren’t treated equally in the standard model. The Poincare group is a spacetime symmetry, whereas all other groups describe inner symmetries of quantum fields. Therefore, we must divide the quest for a larger symmetry into two parts. On the one hand, we can enlarge the spacetime symmetry and on the other hand, we can enlarge the inner symmetry. In addition to these two approaches, we can also try to treat the symmetries equally and enlarge them at the same time.

Let’s start with the spacetime symmetry.

Enlargement of the Spacetime Symmetry

The symmetry group of special relativity is the set of transformations that describe transformations between inertial frames of reference and leave the speed of light invariant. As already noted, this set of transformations is called the Poincare group.

Before Einstein discovered special relativity, people used a spacetime symmetry that is called the Galilean group. The Galilean group also describes transformations between inertial frames of reference but does not care about the speed of light.

The effects of special relativity are only important for objects that are moving fast. For everything that moves slowly compared to the speed of light, the Galilean group is sufficient. The Galilean group is an approximate symmetry when objects move slowly. Mathematically this means that the Galilean group is the contraction of the Poincare group in the limit where the speed of light goes to infinity. For an infinite speed of light, nothing can move with a speed close to the speed of light and thus the Galilean group would be the correct symmetry group.

It is natural to wonder if the Poincare group is an approximate symmetry, too.

One hint in this direction is that the Poincare group is an “ugly” group. The Poincare group is the semi-direct product of the group of translations and the Lorentz group, which described rotations and boosts. Therefore the Poincare group, not a simple group. The simple groups are the “atoms of groups” that can be used to construct all other groups from. However, the spacetime symmetry group that we use in the standard model is not one of these truly fundamental groups.

Already in 1967, Monique Levy‐Nahas studied the question which groups could yield the Poincare group as a limit, analogous to how the Poincare group yields the Galilean group as a limit.

The answer she found was stunningly simple: “the only groups which can be contracted in the Poincaré group are $SO(4, 1)$ and $SO(3, 2)$”. These groups are called the de Sitter and the anti-de Sitter group.

They consist of transformations that describe transformations between inertial frames of reference, leave the speed of light invariant and leave additionally an energy scale invariant. The de Sitter group leaves a positive energy scale invariant, whereas the anti deSitter group leaves a negative energy scale invariant. Both contract to the Poincare group in the limit where the invariant energy scale goes to zero.

Levy‐Nahas’ discovery is great news. There isn’t some large pool of symmetries that we can choose from, but only two. In addition, the groups she found are simple groups and therefore much “prettier” than the Poincare group.

Following Dirac’s mantra and remembering the fact that the deformation: Galilean Group $\to $ Poincare Group led to incredible progress, we should take the idea of replacing the Poincare group with the de Sitter or anti de Sitter group seriously. This point was already emphasized in 1972 by Freeman J. Dyson in his famous talk “Missed opportunities”.

Nevertheless, I didn’t hear about the de Sitter groups in any particle physics lecture or read about them in any particle physics book. Maybe because the de Sitter symmetry is not a symmetry of nature? Because there is no experimental evidence?

To answer these questions, we must first answer the question: what is the energy scale that is left invariant?

The answer is: it’s the cosmological constant!

The present experimental status is that the cosmological constant is tiny but nonzero and positive: $\Lambda \approx 10^{-12}$ eV! This smallness explains why the Poincare group works so well. Nevertheless, the correct spacetime symmetry group is the de Sitter group. I’m a bit confused why this isn’t mentioned in the textbooks or lectures. If you have an idea, please let me know!

Can we enlarge the spacetime symmetry even further?

Yes, we can. But as we know from Levy‐Nahas’ paper, only a different kind of symmetry enlargement is possible. There isn’t any other symmetry that could be more exact and yield the de Sitter group in some limit. Instead, we can think about the question, if there could be a larger broken spacetime symmetry.

Nowadays the idea of a broken symmetry is well known and already an important part of the standard model. In the standard model, the Higgs field triggers the breaking $SU(2) \times U(1) \to U(1)$.

Something similar could’ve been happened to a spacetime symmetry in the early universe. A good candidate for such a broken spacetime symmetry is the conformal group $SO(4,2)$.

The temperature in the early universe was incredibly high and “[i]t is an old idea in particle physics that, in some sense, at sufficiently high energies the masses of the elementary particles should become unimportant” (Sidney Coleman in Aspects of Symmetry). In the massless limit, our equations become invariant under the conformal group (source). The de Sitter group and the Poincare group are subgroups of the conformal group. Therefore it is possible that the conformal group was broken to the de Sitter group in the early universe.

This idea is interesting for a different reason, too. The only parameter in the standard model that breaks conformal symmetry at tree level is the Higgs mass parameter. This parameter is the most problematic aspect of the standard model and possibly the Higgs mass fine-tuning problem can be solved with the help of the conformal group. (See: On naturalness in the standard model by William A. Bardeen.)

Enlargement of the Inner Symmetry

The inner symmetry group of the standard model $ U(1) \times SU(2) \times SU(3) $ is quite ugly, too. Like the Poincare group, it is not a simple group.

There is an old idea by Howard Georgi and Sheldon Glashow that instead of $ U(1) \times SU(2) \times SU(3) $ we use a larger, simple group $G_{GUT} $. These kinds of theories are called Grand Unified Theories (GUTs).

While GUTs have problems, they are certainly beautiful. On obvious “problem” is that in present-day colliders, we do not observe effects of a $G_{GUT}$ structure and thus we assume the unified gauge symmetry is broken at some high energy scale:

\begin{equation} \label{eq:schematicgutbreaking}

G_{GUT} \stackrel{M_{GUT}}{\rightarrow} \ldots \stackrel{M_I}{\rightarrow} G_{SM} \stackrel{M_Z}{\rightarrow} SU(3)_C \times U(1)_Q \, ,

\end{equation}

where the dots indicate possible intermediate scales between $G_{GUT}$ and $G_{SM}$. In the following, we discuss some of the “mysteries” of the standard model that can be resolved by a GUT.

Quantization of Electric Charge

In the standard model the electric charges of the various particles must be put in by hand and there is no reason why there should be any relation between the electron and proton charge. However from experiments it is known that $Q_{\text{proton}}+Q_{\text{electron}}= \mathcal{O}(10^{-20})$. In GUTs one multiplet of $G_{GUT}$ contains quarks and leptons. This way, GUTs provide an elegant explanation for the experimental fact of charge quantization. For example in $SU(5)$ GUTs the conjugate $5$-dimensional representation contains the down quark and the lepton doublet

\begin{equation}

\bar{5} = \begin{pmatrix} \nu_L \\ e_L \\ (d_R^c)_{\text{red}} \\ (d_R^c)_{\text{blue}} \, .\\ (d_R^c)_{\text{green}} \end{pmatrix}

\end{equation}

The standard model generators must correspond to generators of $G_{GUT}$. Thus the electric charge generator must correspond to one Cartan generator of $G_{GUT}$ (The eigenvalues of the Cartan generators of a given gauge group correspond to the quantum numbers commonly used in particle physics.). In $SU(5)$ the Cartan generators can be written as diagonal $5\times 5$ matrices with trace zero. (In $SU(5)$ is the set of $5 \times 5$ matrices $U$ with determinant $1$ that fulfil $U^\dagger U = 1$. For the generators $T_a$ this means $\text{det}(e^{i \alpha_a T_a})=e^{i \alpha_a \text{Tr}(T_a)} \stackrel{!}{=}1$. Therefore $Tr(T_a) \stackrel{!}{=} 0$) Therefore we have

\begin{align}

\text{Tr}(Q)&= \text{Tr} \begin{pmatrix} Q(\nu_L) & 0 & 0 & 0 &0 \\ 0 & Q(e_L) & 0 & 0 &0 \\ 0 & 0 & Q((d_R^c)_{\text{red}}) & 0 &0\\ 0 & 0 & 0 & Q((d_R^c)_{\text{blue}})&0\\ 0 & 0 & 0 & 0 &Q((d_R^c)_{\text{green}}) \end{pmatrix} \stackrel{!}{=} 0 \notag \\

&\rightarrow Q(\nu_L) + Q(e_L) + 3Q(d_R^c) \stackrel{!}{=} 0 \notag \\

&\rightarrow Q(d_R^c) \stackrel{!}{=} -\frac{1}{3} Q(e_L) \, .

\end{align}

Analogously, we can derive a relation between $e_R^c$, $u_L$ and $u_R^c$. Thus $Q_{\text{proton}}+Q_{\text{electron}}= \mathcal{O}(10^{-20})$ is no longer a miracle, but rather a direct consequence of of the embedding of $G_{SM}$ in an enlarged gauge symmetry.

Coupling Strengths

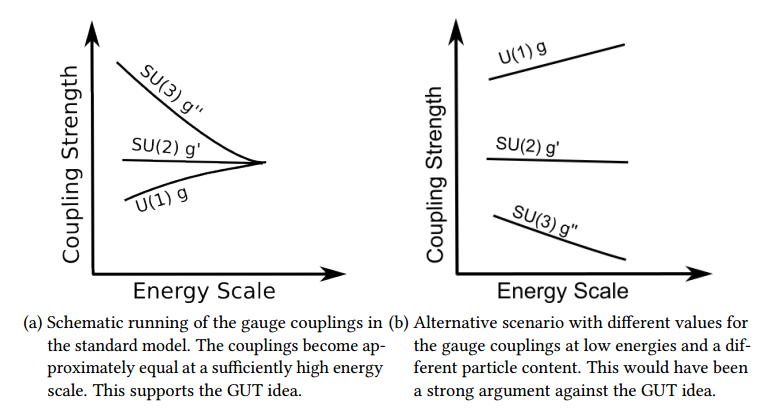

The standard model contains three gauge couplings, which are very different in strength. Again, this is not a real problem of the standard model, because we can simply put these values in by hand. However, GUTs provide a beautiful explanation for this difference in strength. A simple group $G_{GUT}$ implies that we have only one gauge coupling as long as $G_{GUT}$ is unbroken. The gauge symmetry $G_{GUT}$ is broken at some high energy scale in the early universe. Afterward, we have three distinct gauge couplings with approximately equal strength. The gauge couplings are not constant but depend on the energy scale. This is described by the renormalization group equations (RGEs). The RGEs for a gauge coupling depends on the number of particles that carry the corresponding charge. Gauge bosons have the effect that a given gauge coupling becomes stronger at lower energies and fermions have the opposite effect. The adjoint of $SU(3)$ is $8$-dimensional and therefore we have $8$ corresponding gauge bosons. In contrast, the adjoint of $SU(2)$ is $3$-dimensional and thus we have $3$ gauge bosons. For $U(1)$ there is only one gauge boson. As a result for $SU(3)$ the gauge boson effect dominates and the corresponding gauge coupling becomes stronger at lower energies. For $SU(2)$ the fermion and boson effect almost cancel each other and thus the corresponding gauge coupling is approximately constant. For $U(1)$ the fermions dominate and the $U(1)$ gauge coupling becomes much weaker at low energies. This is shown schematically in the figure below. This way GUTs provide an explanation why strong interactions are strong and weak interactions are weak.

Another interesting aspect of the renormalization group evolution of the gauge couplings is that there is a close between the GUT scale and the proton lifetime. Thus proton decay experiments yield directly a bound on the GUT scale $M_{GUT} \gtrsim

10^{15}$ GeV. On the other hand, we can use the measured values of the gauge couplings and the standard model particle content to calculate how the three standard model gauge couplings change with energy. Thus we can approximate the GUT scale as the energy scale at which the couplings become approximately equal. The exact scale depends on the details of the GUT model, but the general result is a very high scale, which is surprisingly close to the value from proton decay experiments. This is not a foregone conclusion. With a different particle content or different measured values of the gauge coupling, this calculation could yield a much lower scale and this would be a strong argument against GUTs. In addition, the gauge couplings could run in the “wrong direction” as shown in the figure. The fact that the gauge coupling run sufficiently slow and become approximately equal at high energies are therefore hints in favor of the GUT idea.

Further Postdictions

In addition to the “classical” GUT postdictions described in the last two sections, I want to mention two additional postdictions:

- A quite generic implication of grand unification small neutrino masses through the type-1 seesaw mechanism. Models based on the popular $SO(10)$ or $E_6$ groups contain automatically a right-handed neutrino $\nu_R$. As a result of the breaking chain this standard model singlet $\nu_R$ gets a superheavy mass $M$. After the last breaking step $G_{SM}\rightarrow SU(3)_C \times U(1)_Y$ the right-handed and left-handed neutrinos mix. This yields a suppressed mass of the left-handed neutrino of order $\frac{m^2}{M}$, where $m$ denotes a typical standard model mass.

- GUTs provide a natural framework to explain the observed matter-antimatter asymmetry in the universe. As already noted above a general implication of GUTs is that protons are no longer stable. Formulated differently, GUTs allow baryon number-violating interactions. This is one of three central ingredients, known as Sakharov condition, needed to produce more baryons than antibaryons in the early universe. Thus, as D. V. Nanopoulos put it, “if the proton was stable it would not exist”.

What’s next?

While the unification of spacetime symmetries was already confirmed by the measurement of the cosmological constant, so far, there is no experimental evidence for the correctness of the GUT idea. Thus the unification of internal symmetries still has to wait. However, proton decay could be detected anytime soon. When Hyper-Kamiokande will start operating the limits on proton lifetime will become one order of magnitude better and this means there is a realistic chance that we finally find evidence for Grand Unification.

This, however, would by no means be the end of the road.

Arguably, it would be awesome if we could unify spacetime and internal symmetries into one large symmetry. However, there is one no-go theorem that blocked progress in this direction: the famous Coleman-Mandula theorem.

Nevertheless, a no-go theorem in physics never really means that something is impossible, only that it isn’t as trivial as one might think. There are several loopholes in the theorem, that potentially allow the unification of spacetime and internal symmetries.

At least to m, it seems as Dirac was right and larger symmetries is the way to go. However, so far, we don’t know which way we should follow.